‘Physical Review A’ publishes an IGFAE-led study on simulating high-energy particle collisions with quantum computers

12.05.2021

A new study led by the Instituto Galego de Física de Altas Enerxías (IGFAE) proposes a novel strategy, based on quantum computing, to simulate physical processes at the Large Hadron Collider (LHC).

The Standard Model (SM) of particle physics is the most successful and widely accepted theory describing the elementary particles that make up the universe and the way they interact through the strong, weak and electromagnetic forces. In the language of the Standard Model, nature is described in terms of fundamental fields that extend throughout the universe. A field can be pictured as the temperature distribution inside a room (the universe), with spots where the temperature is very high or very low; in the SM, these spots correspond to points in the universe where a particle is found. The model has been, and continues to be, extensively tested in experiments around the world. Perhaps the most famous is the Large Hadron Collider (LHC) at CERN, in Geneva, where in 2012 the last piece of the Standard Model still awaiting verification was observed for the first time: the Higgs boson.

The LHC tests the SM by accelerating protons to nearly the speed of light in a 27 km ring and then making them collide. Because protons are composed of other elementary particles, they break apart on impact and produce a plethora of final states, much like billiard balls struck at high speed. The LHC detectors measure these final states, and the measurements are then compared with theoretical simulations. This practice has been very successful, but as the precision of the experimental data increases, for example in searches for new physics beyond the Standard Model, the computational power of today's computers becomes a limiting factor.

However, as first pointed out in the foundational work of Jordan, Lee and Preskill (JLP), these technical difficulties can in principle be overcome by representing the fields associated with Standard Model particles in terms of quantum bits (qubits) and simulating their interactions on a quantum computer. Although this approach is, in theory, exponentially faster than the best known classical simulation algorithm, the number of qubits it requires far exceeds what near-term quantum computers are expected to provide, which rules out its application in the coming years. It is therefore very interesting to consider other quantum algorithms for simulating LHC processes in the near future.

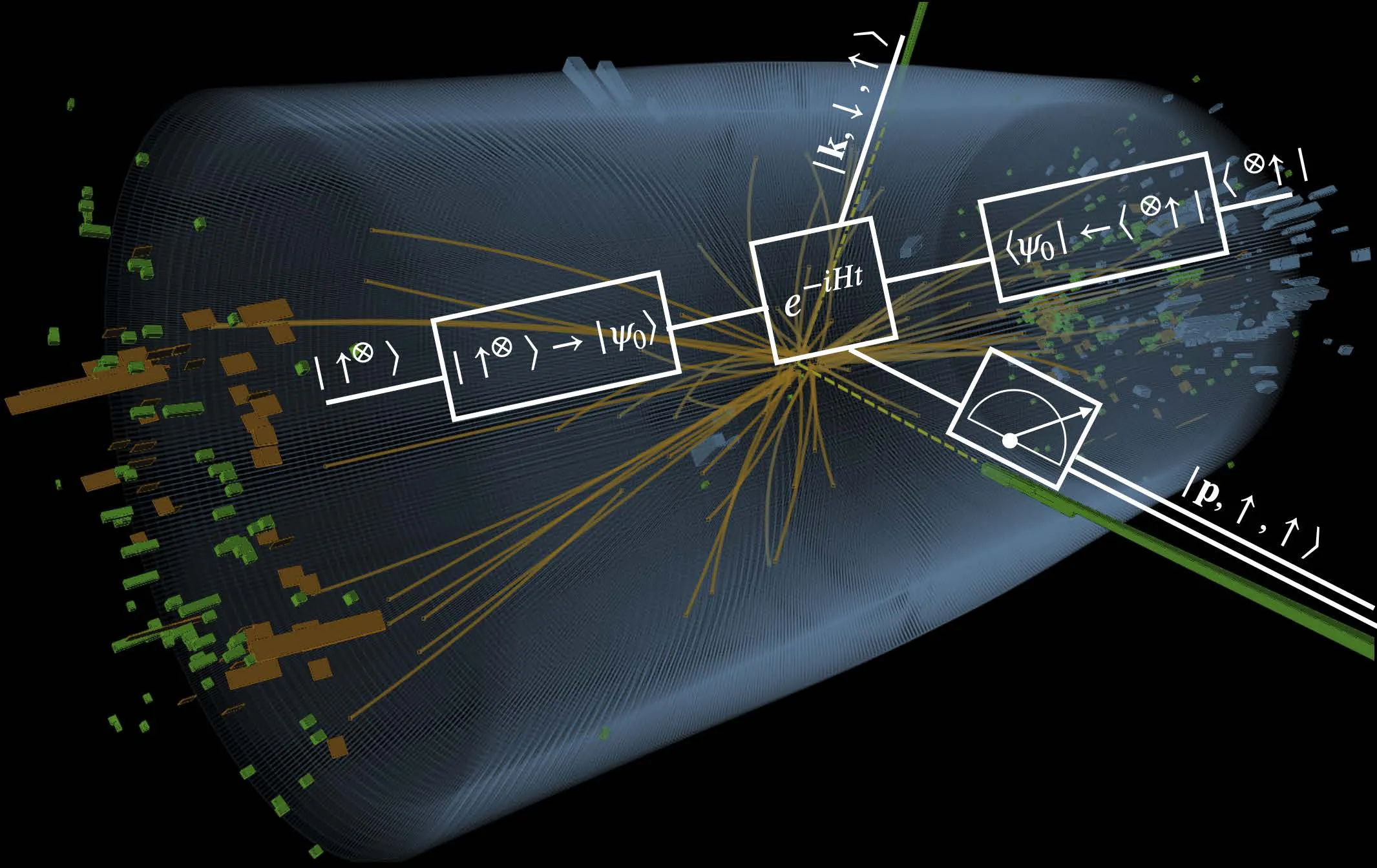

Now, a new study recently published in the journal Physical Review A by IGFAE predoctoral researcher João Barata, together with Niklas Mueller (University of Maryland), Andrey Tarasov (Ohio State University and the Center for Frontiers in Nuclear Science at Stony Brook University) and Raju Venugopalan (Brookhaven National Laboratory), proposes a new strategy for simulating high-energy particle scattering, physically motivated by Feynman's parton model. In that model, protons colliding at high energy can be viewed as a collection of less energetic fundamental particles. So, instead of describing the proton fields in terms of quantum bits, it is simpler to map the particles directly onto the degrees of freedom available in the quantum computer. As long as the number of particles is small, this approach is therefore expected to be the natural one for describing the collisions produced at the LHC.
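To give a rough feel for why mapping particles, rather than fields, onto qubits can pay off when few particles are involved, here is a minimal counting sketch. It is not taken from the paper: the function names, the assumption that a field encoding spends a fixed number of qubits on every lattice site, the assumption that a particle encoding spends one binary register per particle to label its mode, and all the numbers are hypothetical and purely illustrative.

```python
# Toy qubit-counting comparison (illustrative assumptions only,
# not the resource estimates of JLP or of the study described here).

def field_encoding_qubits(num_sites: int, qubits_per_site: int) -> int:
    """Assumed field picture: every lattice site stores the field value
    in its own small register, so the cost grows with the volume."""
    return num_sites * qubits_per_site


def particle_encoding_qubits(num_particles: int, num_modes: int) -> int:
    """Assumed particle picture: each particle gets one register that
    labels which of `num_modes` positions or momenta it occupies."""
    # Number of bits needed to index num_modes distinct values.
    bits_per_particle = (num_modes - 1).bit_length()
    return num_particles * bits_per_particle


if __name__ == "__main__":
    num_sites = 64 ** 3  # hypothetical 64 x 64 x 64 lattice
    print("field picture:   ", field_encoding_qubits(num_sites, qubits_per_site=4))
    print("particle picture:", particle_encoding_qubits(num_particles=10, num_modes=num_sites))
```

Under these assumptions the field picture scales with the number of lattice sites, while the particle picture scales with the number of particles times the logarithm of the number of modes, which is why the latter is attractive only while the particle number stays small.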

“Although putting it into practice requires more resources than are available in today's quantum computers, in some important respects it offers a direct advantage over the JLP proposal for simulating high-energy scattering”, explains João Barata, who is carrying out his doctoral thesis at IGFAE thanks to an INPhINIT fellowship from the “la Caixa” Foundation. “For example, using this ‘particle picture’, the computation on a quantum computer is remarkably similar to the measurement protocols used in the LHC detectors. In addition, this algorithm can be applied directly to the most standard theoretical calculations of the processes that govern the Standard Model, so we hope it will find many more applications beyond this work”.

The application of quantum computing to experimental data analysis and theoretical calculations in High Energy Physics, Astrophysics and Nuclear Physics is an emerging, rapidly growing field worldwide. It is also of great interest at IGFAE, where researchers are directing their efforts towards the challenges of the second quantum revolution.

References:

J. Barata, N. Mueller, A. Tarasov, R. Venugopalan, Phys. Rev. A 103, 042410 (2021). doi.org/10.1103/PhysRevA.103.042410

Image: Illustration of a two-photon event in the CMS experiment at CERN during the search for the Higgs boson in 2011 and 2012. The superimposed “circuit” represents the same process as simulated on a quantum computer: the boxes and lines are the components of this circuit (as in an electrical circuit), and the formulas represent the physical operation being performed. Credit: CERN