A new study led by the Instituto Galego de Física de Altas Enerxías (IGFAE) proposes a novel strategy, based on quantum computing, to simulate physical processes at the Large Hadron Collider (LHC).

The Standard Model (SM) of particle physics is the most successful and widely accepted theory for describing the elementary particles that make up the universe and the way they interact through the strong, weak and electromagnetic forces. In the language of the Standard Model, nature is described in terms of fundamental fields that extend throughout the universe. A field can be pictured as the temperature distribution within a room ‒the universe‒ with spots where it is very high or very low; in the SM, such spots correspond to points in the universe where a particle is found. This model has been, and continues to be, extensively tested in experiments around the world. Perhaps the most famous is the Large Hadron Collider (LHC) at CERN in Geneva, where the last remaining piece of the Standard Model to be verified ‒the Higgs boson‒ was observed for the first time in 2012.

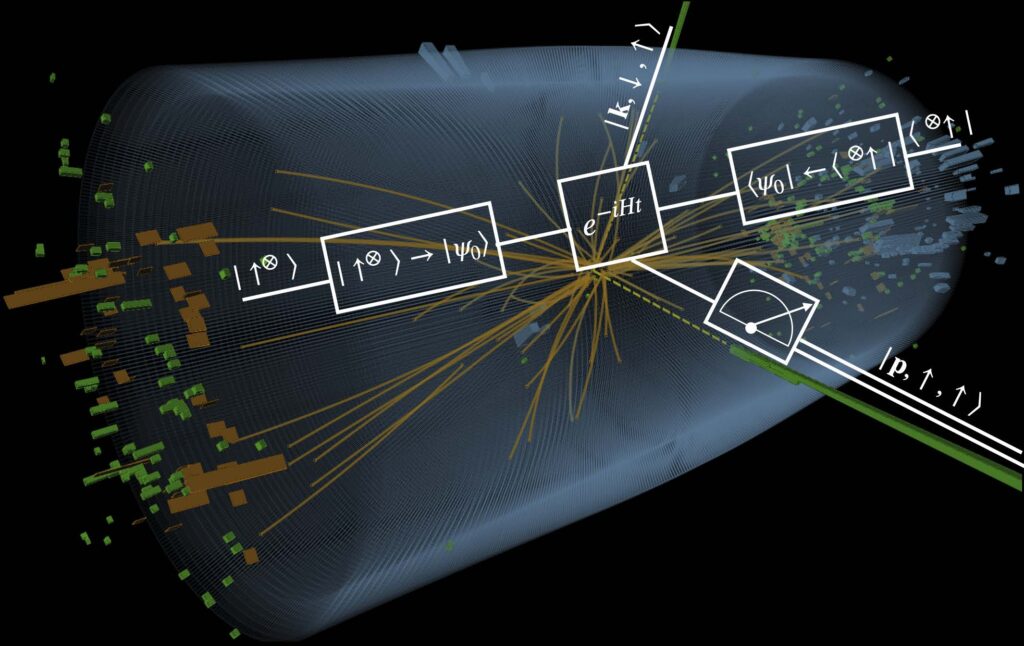

The LHC tests the SM by accelerating protons close to the speed of light in a 27 km ring and then smashing them against each other. Since protons are composed of other elementary particles, they break apart and produce a plethora of final states ‒like billiard balls hit at high speed‒ which are measured by the LHC detectors and compared with theoretical simulations. This practice has been very successful, but as the precision of the experimental data increases, for example in searches for new physics beyond the Standard Model, the simulations run up against the computational limits of today’s computers.

However, as first pointed out in the seminal work of Jordan, Lee and Preskill (JLP), these technical difficulties can, in principle, be overcome by representing the fields associated with Standard Model particles in terms of quantum bits (qubits) and simulating their interactions on a quantum computer. Although this approach is, in theory, exponentially faster than the best known classical simulation algorithms, the number of qubits it requires far exceeds the number expected to be available on quantum computers in the short term, which rules out its implementation in the coming years. Thus, other quantum algorithms for simulating LHC processes that come closer to a full implementation in the near future are of great interest.

Now, a new study recently published in the journal Physical Review A by IGFAE predoctoral researcher João Barata, together with Niklas Mueller (University of Maryland), Andrey Tarasov (Ohio State University and Center for Frontiers in Nuclear Science at Stony Brook University) and Raju Venugopalan (Brookhaven National Laboratory), proposes a novel strategy to simulate high-energy particle scattering, physically motivated by the Feynman parton model. In that model, when protons collide at high energy, they can be seen as a collection of less energetic fundamental particles. Thus, instead of describing the proton fields in terms of quantum bits, it is easier to directly assign the particles to the degrees of freedom available in the quantum computer. Therefore, if the number of particles is small, this approach is expected to be the natural one to describe the collisions produced at the LHC.
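To give a rough feel for why the particle picture can be cheaper when few particles are involved, the following is a toy counting sketch, not the resource estimate from the paper. It assumes, purely for illustration, that a field-based encoding stores a few qubits for the field value at every lattice site, while a particle-based encoding stores one register per particle holding a site (or momentum mode) label plus one occupancy qubit. The function names and the numbers used are hypothetical.

```python
def field_encoding_qubits(lattice_sites: int, qubits_per_site: int) -> int:
    """Toy count: the field value at every lattice site gets its own qubits."""
    return lattice_sites * qubits_per_site


def particle_encoding_qubits(lattice_sites: int, max_particles: int) -> int:
    """Toy count: one register per particle.

    Each register holds a binary label for the site/mode the particle occupies,
    plus one extra qubit flagging whether the register is occupied at all
    (an illustrative assumption, not taken from the article).
    """
    label_bits = max(1, (lattice_sites - 1).bit_length())  # ceil(log2(sites))
    return max_particles * (label_bits + 1)


if __name__ == "__main__":
    sites = 1024          # hypothetical lattice size
    per_site = 4          # hypothetical qubits per field value
    particles = 4         # hypothetical maximum number of particles
    print("field-based:   ", field_encoding_qubits(sites, per_site))
    print("particle-based:", particle_encoding_qubits(sites, particles))
```

In this toy count the field-based register grows linearly with the number of lattice sites, whereas the particle-based one grows only logarithmically with it but linearly with the number of particles, which is consistent with the article's remark that the approach is natural when the number of particles is small.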

“Although its implementation still requires more resources than those available in current quantum computers, it offers, in some important respects, a direct advantage over the JLP approach to simulating high-energy scattering,” explains João Barata, who is doing his doctoral thesis at the IGFAE thanks to an INPhINIT fellowship from “la Caixa” Foundation. “For example, using this ‘particle image’, the calculation on a quantum computer is remarkably similar to the measurement protocols used in the LHC detectors. Moreover, this algorithm can be directly applied to the more standard theoretical calculations of the processes that govern the Standard Model, so we expect that it will find many more applications beyond this work”.

The application of quantum computing to experimental data analysis and theoretical calculations in High Energy Physics, Astrophysics and Nuclear Physics is an emerging field worldwide. It is growing rapidly and is of great interest at the IGFAE, where researchers are directing their efforts towards the challenges of the second quantum revolution.

References:

J. Barata, N. Mueller, A. Tarasov, R. Venugopalan, Phys. Rev. A 103, 042410 (2021). doi.org/10.1103/PhysRevA.103.042410

Image: CMS Higgs Search in 2011 and 2012 data: candidate photon-photon event. The superimposed “circuit” represents the same process being simulated in a quantum computer. The boxes and the lines are the parts of this circuit (as you would have in an electrical circuit) and the formulas represent the physical operation being realised. Credit: CERN.